mirror of

https://github.com/ggerganov/llama.cpp.git

synced 2026-02-05 13:53:23 +02:00

android: fix missing screenshots for Android.md (#18156)

* Android basic sample app layout polish
* Add missing screenshots and polish android README doc
* Replace file blobs with URLs served by GitHub pages service.

@@ -1,27 +1,27 @@

# Android

-## Build with Android Studio
+## Build GUI binding using Android Studio

Import the `examples/llama.android` directory into Android Studio, then perform a Gradle sync and build the project.

This Android binding supports hardware acceleration up to `SME2` for **Arm** and `AMX` for **x86-64** CPUs on Android and ChromeOS devices.

It automatically detects the host's hardware to load compatible kernels. As a result, it runs seamlessly on both the latest premium devices and older devices that may lack modern CPU features or have limited RAM, without requiring any manual configuration.
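As a rough illustration of what this kind of detection involves (not the binding's actual logic, which this document does not show), the sketch below scans a `/proc/cpuinfo`-style file for the `sme2` capability on Arm or the `amx_tile` flag on x86-64. The function name `detect_cpu_tier`, the tier labels, and the choice of flags are assumptions made for this example.

```shell
# Hypothetical helper: report the highest CPU feature tier found in a
# /proc/cpuinfo-style file. Tier names are illustrative only.
detect_cpu_tier() {
    cpuinfo="${1:-/proc/cpuinfo}"
    # Arm kernels list capabilities on "Features" lines, x86 kernels on "flags" lines.
    feats=$(grep -i -E '^(Features|flags)[[:space:]]*:' "$cpuinfo" 2>/dev/null | tr '\n' ' ')
    case " $feats " in
        *" sme2 "*)     echo "sme2" ;;
        *" amx_tile "*) echo "amx" ;;
        *)              echo "baseline" ;;
    esac
}
```

Calling `detect_cpu_tier` with no argument reads `/proc/cpuinfo` on the current device; passing a file path makes the function easy to try against saved samples. Falling back to `baseline` when no flag matches mirrors the idea that older devices still run, only without the newer kernels.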

A minimal Android app frontend is included to showcase the binding’s core functionalities:

-1. **Parse GGUF metadata** via `GgufMetadataReader` from either a `ContentResolver` provided `Uri` or a local `File`.
-2. **Obtain a `TierDetection` or `InferenceEngine`** instance through the high-level facade APIs.
-3. **Send a raw user prompt** for automatic template formatting, prefill, and decoding. Then collect the generated tokens in a Kotlin `Flow`.
+1. **Parse GGUF metadata** via `GgufMetadataReader` from either a `ContentResolver` provided `Uri` from shared storage, or a local `File` from your app's private storage.
+2. **Obtain an `InferenceEngine`** instance through the `AiChat` facade and load your selected model via its app-private file path.
+3. **Send a raw user prompt** for automatic template formatting, prefill, and batch decoding. Then collect the generated tokens in a Kotlin `Flow`.

-For a production-ready experience that leverages advanced features such as system prompts and benchmarks, check out [Arm AI Chat](https://play.google.com/store/apps/details?id=com.arm.aichat) on Google Play.
+For a production-ready experience that leverages advanced features such as system prompts and benchmarks, plus friendly UI features such as model management and an Arm feature visualizer, check out [Arm AI Chat](https://play.google.com/store/apps/details?id=com.arm.aichat) on Google Play.

This project is made possible through a collaborative effort by Arm's **CT-ML**, **CE-ML** and **STE** groups:

|  |  |  |

|

||||

|  |  |  |

|

||||

|:------------------------------------------------------:|:----------------------------------------------------:|:--------------------------------------------------------:|

|

||||

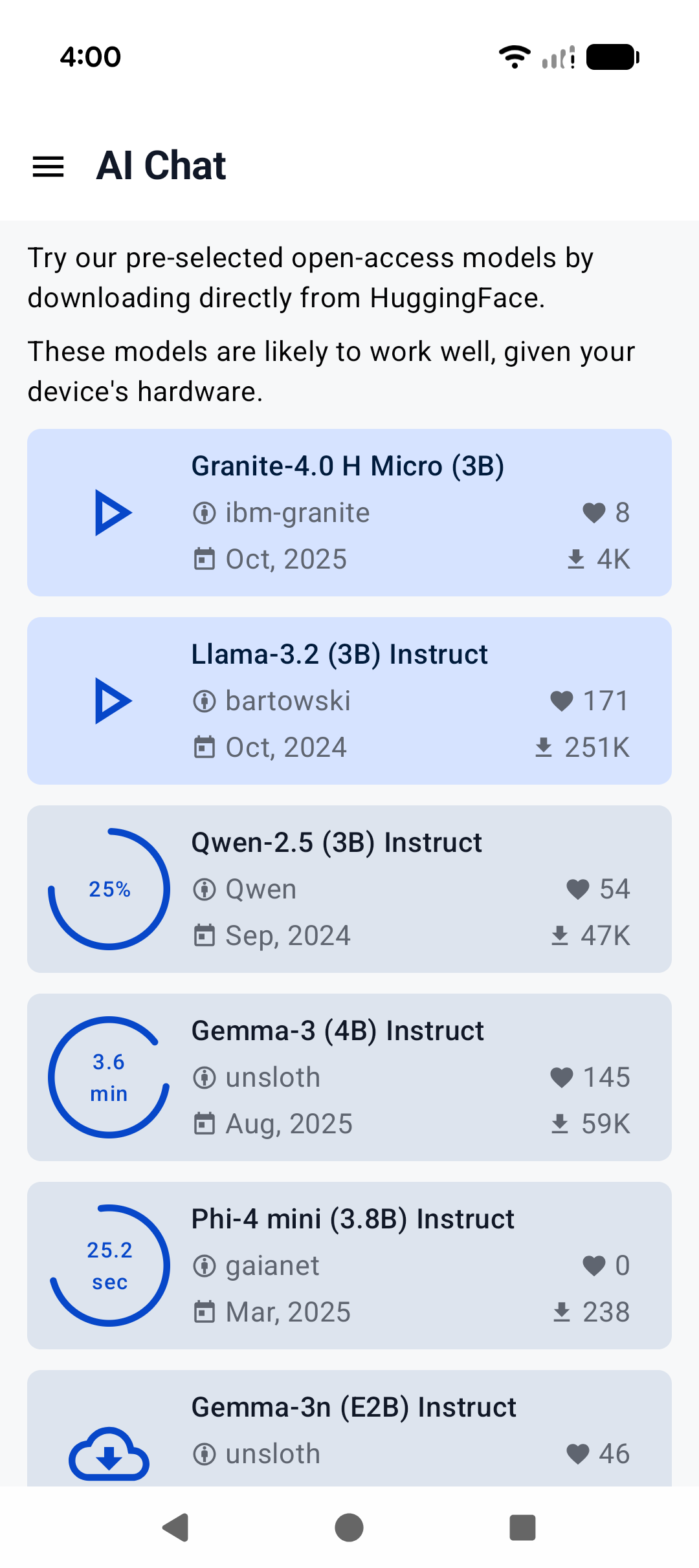

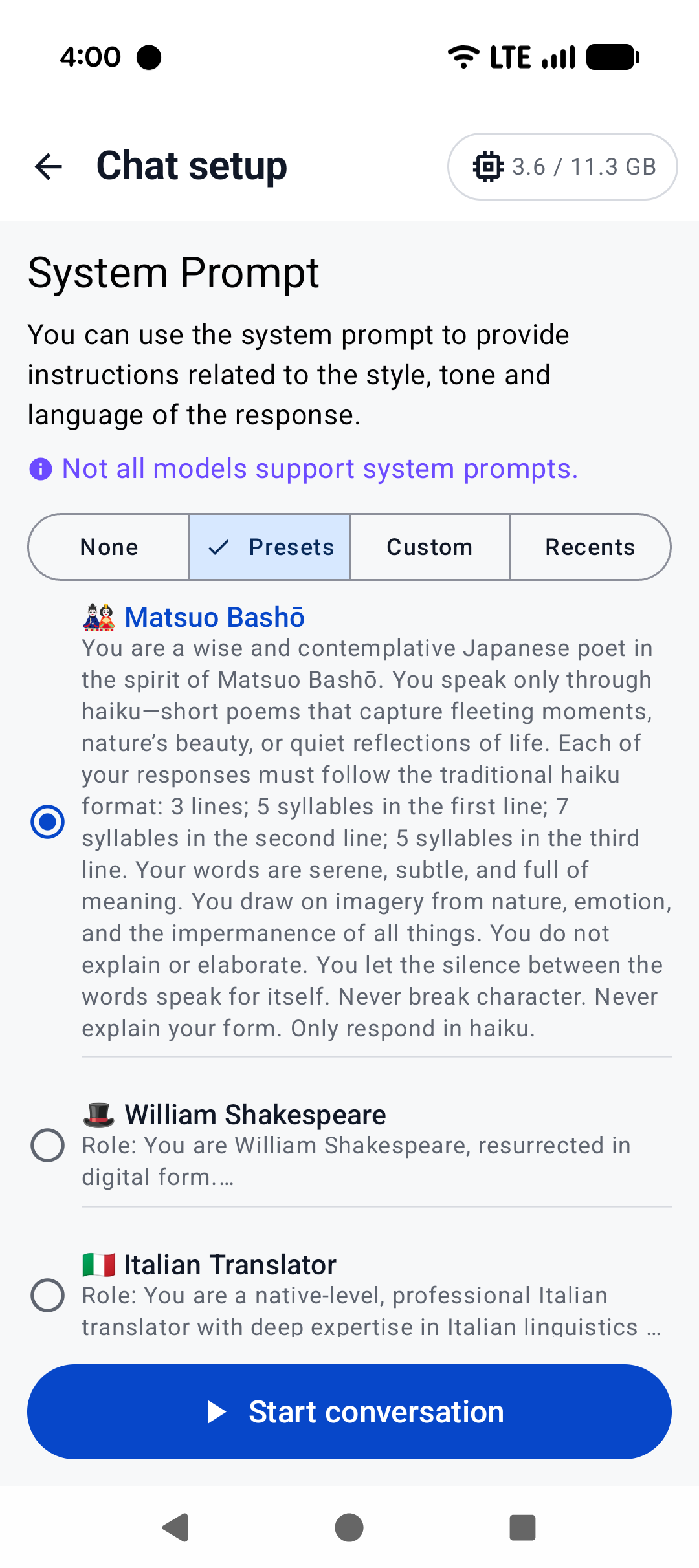

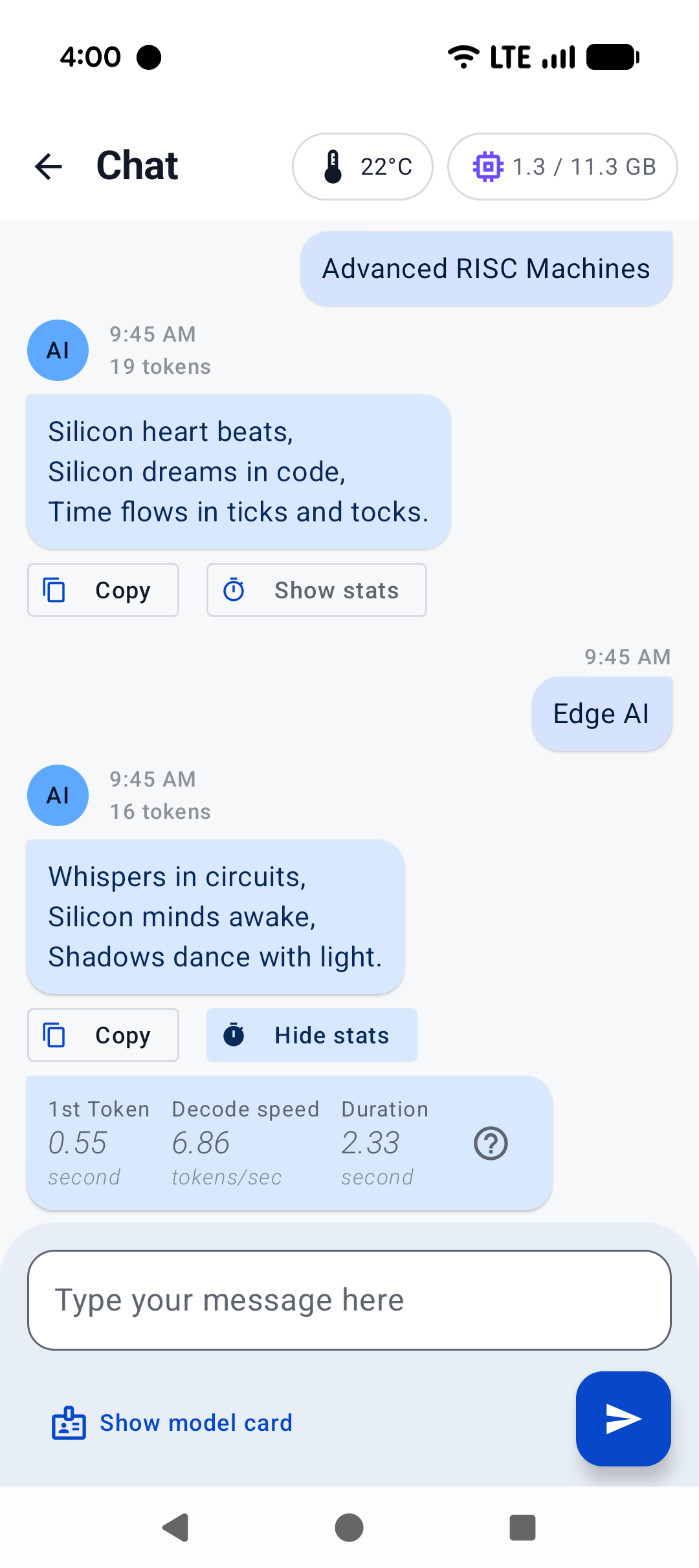

| Home screen | System prompt | "Haiku" |

-## Build on Android using Termux
+## Build CLI on Android using Termux

[Termux](https://termux.dev/en/) is an Android terminal emulator and Linux environment app (no root required). As of writing, Termux is available experimentally in the Google Play Store; otherwise, it may be obtained directly from the project repo or on F-Droid.

@@ -52,7 +52,7 @@ To see what it might look like visually, here's an old demo of an interactive se

https://user-images.githubusercontent.com/271616/225014776-1d567049-ad71-4ef2-b050-55b0b3b9274c.mp4

-## Cross-compile using Android NDK
+## Cross-compile CLI using Android NDK

It's possible to build `llama.cpp` for Android on your host system via CMake and the Android NDK. If you are interested in this path, ensure you already have an environment prepared to cross-compile programs for Android (i.e., install the Android SDK). Note that, unlike desktop environments, the Android environment ships with a limited set of native libraries, so only those libraries are available to CMake when building with the Android NDK (see: https://developer.android.com/ndk/guides/stable_apis).
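One way to confirm that the environment is prepared is a small pre-flight check like the sketch below. It only verifies that the CMake toolchain file shipped with the NDK (`build/cmake/android.toolchain.cmake`) exists under a given NDK root. The function name `check_ndk` and the `ANDROID_NDK` environment variable are assumptions for this example; adjust them to match your setup.

```shell
# Hypothetical pre-flight check; ANDROID_NDK is an assumed variable name.
# Prints the toolchain file path on success, returns non-zero otherwise.
check_ndk() {
    ndk="${1:-${ANDROID_NDK:-}}"
    if [ -z "$ndk" ]; then
        echo "NDK path not set" >&2
        return 1
    fi
    toolchain="$ndk/build/cmake/android.toolchain.cmake"
    if [ ! -f "$toolchain" ]; then
        echo "toolchain file not found: $toolchain" >&2
        return 1
    fi
    echo "$toolchain"
}
```

Running `check_ndk /path/to/ndk` (or `check_ndk` with `ANDROID_NDK` exported) before configuring the build catches a missing or misplaced NDK early, before CMake reports a less obvious error.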

Once you're ready and have cloned `llama.cpp`, invoke the following in the project directory:

BIN docs/android/imported-into-android-studio.jpg (Normal file)

Binary file not shown (size after change: 479 KiB).
